Google I/O 2018 - My Highlights

2018-05-25

My highlights from attending Google I/O in person.

This article was first posted on Medium.com.

Google I/O 2018, Shoreline Amphitheatre, Mountain View, CA

I was fortunate enough to attend the annual Google developer conference in Mountain View this year. I got to see a lot of awesome announcements and meet a ton of interesting people who work in tech. In this post I'll cover some of my personal highlights of the three-day event.

The Keynote

Once again Sundar Pichai delivered a keynote jam-packed with new and shiny tech innovations. The talking point of the entire event was that unnerving phone call made by Google Duplex; if you haven't already checked it out, you can watch it here.

Sundar delivering a very sunny keynote

As with last year, Google are putting AI into everything from Maps to Photos; even screen brightness management on Android P will use ML to learn your preferences. One highlight for me was the focus on "Digital Wellbeing": an approach to helping users spend less time on their devices. It was impressive to see this come straight from Google, and I can't wait to see other companies introduce features that contribute to this concept.

Android

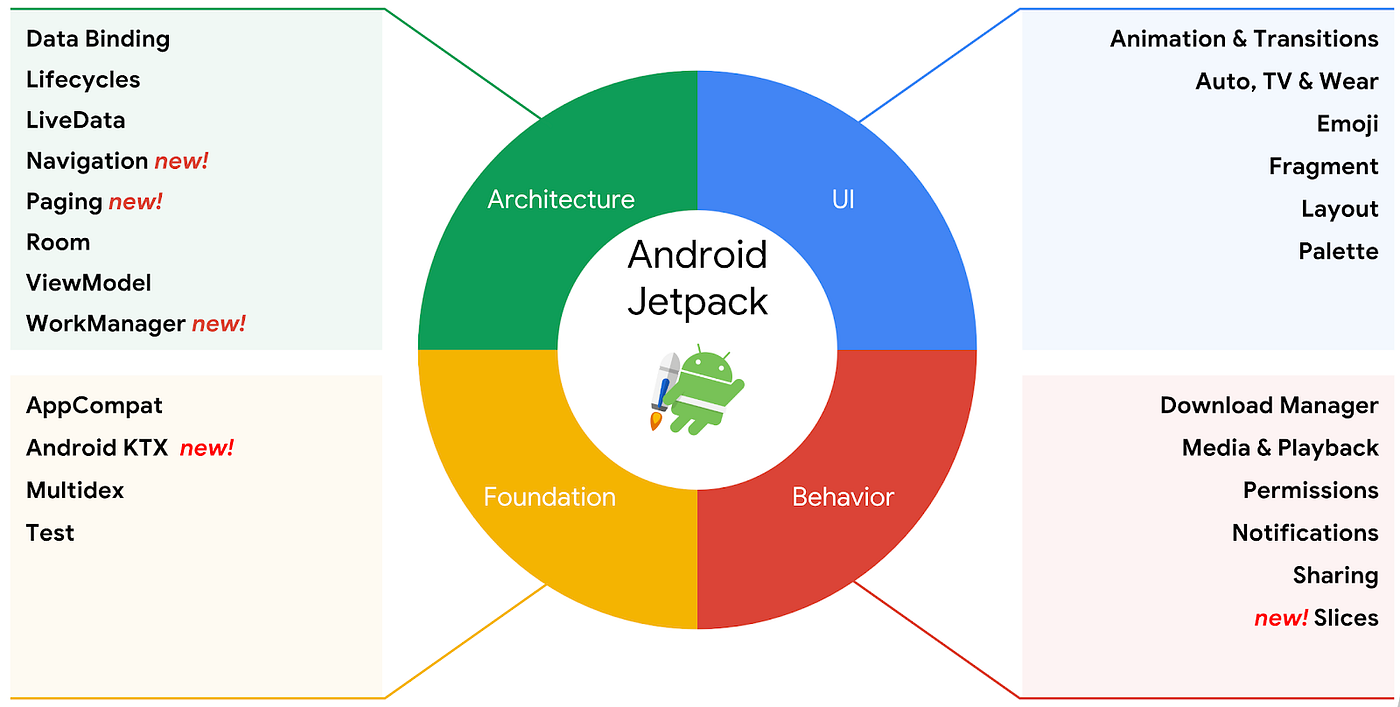

Since the inception of the Android operating system, Google has never prescribed an architecture pattern to follow. This has caused a fair bit of confusion, and I've worked on multiple Android codebases that follow MVP, MVVM, MVI and sometimes no pattern at all! Android Jetpack aims to rectify this by taking the Architecture Components (announced last year) and promoting them as an opinionated architecture.

https://android-developers.googleblog.com/2018/05/use-android-jetpack-to-accelerate-your.html

I'm particularly excited for the new Navigation component, which simplifies app navigation and comes with a nice visual tool in Android Studio (think Xcode Storyboards). WorkManager also looks like it'll make life much easier when it comes to handling background work such as uploading data. All in all, I think Jetpack will set a good standard for architecting future Android apps.

Slices are an interesting new Android feature that lets developers surface some of their app's functionality within the Google Assistant. They seem pretty straightforward to create (dev docs here) and are supported all the way back to Android 4.4!

AndroidX will eventually replace the Support Library. This is good news, as we won't have to juggle the v4 and v7 variants of the different support libraries. Essentially, it promises backward compatibility across multiple Android versions, which should hopefully make our lives as Android developers a lot easier.

Android App Bundles are a new form of app distribution. Instead of shipping resources for every screen density, language and CPU architecture to every device, the Play Store will only deliver what each device requires. This means fewer assets in each download, resulting in a smaller APK and, ideally, more downloads. LinkedIn have been using Android App Bundles and their APK size has decreased by 23%! The same technology allows developers to ship dynamic features: if a feature is only required by a certain subset of users, it won't be downloaded by everyone, once again resulting in smaller APKs.
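To make the idea concrete, here is a minimal sketch in Java of how a store might pick the pieces of a bundle for one device. The `Split` and `DeviceConfig` types and the `selectSplits` method are hypothetical. They are not the Play Store's or bundletool's real API. The split dimensions (density, locale, ABI, plus on-demand features) are an assumption chosen to mirror the paragraph above.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Set;

// Hypothetical model of App Bundle split selection; NOT the Play Store's real API.
public class SplitSelector {

    // One piece of a bundle, tagged with the dimension it targets
    // ("base", "density", "locale", "abi" or "feature") and the value it serves.
    static final class Split {
        final String name;
        final String dimension;
        final String value;

        Split(String name, String dimension, String value) {
            this.name = name;
            this.dimension = dimension;
            this.value = value;
        }
    }

    // The (hypothetical) configuration reported by the device requesting the app.
    static final class DeviceConfig {
        final String density;
        final String locale;
        final String abi;

        DeviceConfig(String density, String locale, String abi) {
            this.density = density;
            this.locale = locale;
            this.abi = abi;
        }
    }

    // Keeps the base split, the splits matching the device's config,
    // and feature splits only when the user has requested that feature.
    static List<String> selectSplits(List<Split> bundle, DeviceConfig device, Set<String> requestedFeatures) {
        List<String> selected = new ArrayList<>();
        for (Split s : bundle) {
            boolean keep;
            switch (s.dimension) {
                case "base":
                    keep = true;
                    break;
                case "density":
                    keep = s.value.equals(device.density);
                    break;
                case "locale":
                    keep = s.value.equals(device.locale);
                    break;
                case "abi":
                    keep = s.value.equals(device.abi);
                    break;
                case "feature":
                    keep = requestedFeatures.contains(s.value);
                    break;
                default:
                    keep = false;
            }
            if (keep) {
                selected.add(s.name);
            }
        }
        return selected;
    }

    public static void main(String[] args) {
        List<Split> bundle = Arrays.asList(
            new Split("base.apk", "base", ""),
            new Split("hdpi.apk", "density", "hdpi"),
            new Split("xxhdpi.apk", "density", "xxhdpi"),
            new Split("en.apk", "locale", "en"),
            new Split("fr.apk", "locale", "fr"),
            new Split("arm64.apk", "abi", "arm64-v8a"),
            new Split("x86.apk", "abi", "x86"),
            new Split("ar_camera.apk", "feature", "ar_camera"));
        DeviceConfig phone = new DeviceConfig("xxhdpi", "en", "arm64-v8a");
        // Prints [base.apk, xxhdpi.apk, en.apk, arm64.apk]
        System.out.println(selectSplits(bundle, phone, Collections.<String>emptySet()));
    }
}
```

The design is plain filtering. Every device still gets the base, but only one density, locale and ABI split, and only the ones that match it. A feature split is added only when asked for. For example, passing `Collections.singleton("ar_camera")` as the requested features would append `ar_camera.apk` to the list above.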

Artificial Intelligence

ML Kit allows iOS and Android devs to make use of Google's machine learning resources in their apps. Thanks to Firebase, you can run these APIs on-device or in the cloud, and it comes ready to go with some pre-built models. At the moment, the library supports text recognition, image labeling, barcode scanning, face detection and landmark recognition.

You can find ML Kit on the Firebase Dashboard

Augmented Reality

When ARCore was announced last year, I eagerly dived into the samples with the intention of being an early adopter of this emerging technology. I was deflated once I realised that I would have to write OpenGL code, and a graphics programmer I am not. With this in mind, I was delighted to welcome the Sceneform SDK, which allows you to use plain Java (or Kotlin) to write AR applications. This makes it far more accessible for Android devs to try their hand at building Augmented Reality experiences, and Google have provided a few impressive samples, including our very own Solar System.

Check out our Solar System in AR!

Cloud Anchors allow for multi-user AR experiences across both Android and iOS. This opens up so much potential for AR as a medium now that users can collaborate within the same virtual space. You can check out the Codelab to try Cloud Anchors for yourself; it's a lot of fun!

Design

When Material Design was first released back in 2014, it didn’t take off quite as Google had hoped. The guidelines were too strict and resulted in apps and websites that all looked identical barring a different colour scheme for each.

The latest Material Design changes this with a set of guidelines that allow for flexibility, customisation and the ability to create a consistent yet unique brand identity. Along with pre-built Material Components for Android, iOS, Web and Flutter, Google have released a Sketch plugin that allows you to easily tweak your design palette. Changing any of these values propagates the change across all of your layouts. Another tool for designers is Gallery, which seems to be a free alternative to Zeplin and has its own native Android and iOS apps. I can't wait to see new Material designs appear over the coming months.

Shrine, a Material Design case study from Google

Flutter

I actually took a very shallow dive into Flutter the week before I/O. As someone who's worked with React Native, I was keen to try out Google's own cross-platform solution. You can read the article here; to be honest, I wasn't completely sold on either Flutter or Dart, the programming language it uses.

However, after speaking to the development team, testing out some sample apps and taking part in some Codelabs, it's safe to say I have been convinced. Google has done a tonne of groundwork by creating ready-to-use widgets as well as integrated tools that work nicely with Android Studio and Visual Studio Code. Judging by the sheer number of Google employees in Flutter t-shirts, they're pushing the platform pretty heavily. With the release of Flutter Beta 3, there seems to be a huge push to get developers shipping apps into production. I'll keep an eager eye on the platform, and I'm interested to see whether Google publish an app using the SDK within the next year.

See you next year, Droid!

Wrapping Up

I've covered just some of the announcements made at I/O this year, so I encourage you to check out the recap to see all of the sessions. Feel free to leave a comment to let me know what you think, and fingers crossed I'll see you next year!